In The Vibe Coding Handbook: How To Engineer Production-Grade Software With GenAI, Chat, Agents, and Beyond, Steve Yegge and I describe a spectrum of coding modalities with GenAI. At one extreme is “pairing,” where you work with the AI to achieve a goal. It really is like pair programming with another person, if that person were both a “summer intern who believes in conspiracy theories” (as coined by Simon Willison) and the world’s best software architect.

At the other extreme is “delegating” (which I think many will associate with “agentic coding”), where you ask the AI to do something and it does it without any human interaction.

You delegate when the task is not especially novel, when the AI has succeeded at similar tasks in the past, when the person (and the AI) has the appropriate level of skill, and when the consequences of something going wrong are modest.

These dimensions dictate how frequently you need reporting and feedback. (If these sound familiar, they are the dimensions Dr. Andy Grove laid out in his book High Output Management when he described how often people need to report in!)

What interests me are the conditions under which you can get away with “extreme delegation.” When you delegate a mission to someone with a tremendous degree of latitude and autonomy, you are granting them the ability to execute with little oversight, infrequent communication, and a great deal of trust.

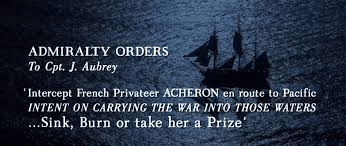

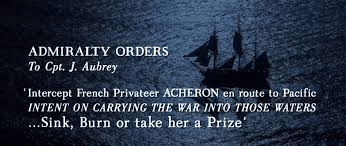

One domain that has studied this in tremendous detail is military command, especially naval command. The movie Master and Commander: The Far Side of the World is set at the height of the Napoleonic Wars, and it’s pretty astonishing that the entirety of Captain Jack Aubrey’s orders from the British Admiralty were simply this: “Intercept French Privateer Acheron en route to Pacific, intent on carrying the war into those waters. Sink, burn, or take her as a prize.” (The movie is based on a series of novels by Patrick O’Brian.)

That order, given around 1805 in the story, reflected the reality of communications at the time. Captains at sea could not communicate with the Admiralty until they returned to port, which could take well over a year. In fact, long-distance voyages to India or the Pacific typically lasted multiple years!

Naval commanders during this period operated with astounding levels of autonomy. Their mission orders were notably brief, reflecting the high level of trust and discretion granted to them. This was the Admiralty’s command style during the Napoleonic Wars, an era characterized by significant delegation of authority and decision-making power.

I was recently watching the Hulu adaptation of James Clavell’s Shōgun with my family. What really caught my attention, though it isn’t central to the plot, was the Portuguese “Black Ship” commanded by Captain Ferreira. This vessel was inspired by the historical “Kurofune” ships of the late 1500s, which carried incredibly valuable cargo between Macau and Nagasaki, capitalizing on Portugal’s trade monopoly throughout Asia.

Through repeated chat sessions with ChatGPT, I was shocked to learn that these ships were among the most valuable cargo ships in the world. Each voyage transported silk, porcelain, spices, gold, and silver worth approximately $300-500 million in today’s USD, potentially approaching $1 billion when accounting for the enormous profit margins these luxury goods commanded in Japan.

To put this into perspective, the largest VLCC (Very Large Crude Carrier) supertankers carry oil valued at “only” $160-300 million.

What is astonishing is how much more authority Captain Ferreira had than even modern VLCC captains. (Steve Yegge, upon reading this table, remarked that any program manager in a large organization will likely find these levels of responsibility familiar.)

| Authority Domain | Captain Ferreira (1600s Black Ship) | Modern Supertanker Captain |
|---|---|---|
| Route Planning | Moderate authority: followed established trade routes but could make tactical deviations for weather or safety. | Limited authority: follows predetermined routes with minimal deviation allowed. |
| Cargo Decisions | Limited authority: cargo selection and pricing determined by Portuguese trade officials in Macau. | No authority: cargo decisions made entirely by corporate headquarters. |
| Combat Decisions | High authority: could decide how to engage pirates or rival traders. | Limited authority: follows predetermined security protocols, relies on naval escorts. |
| Trade Negotiations | Limited authority: operated within predetermined trade agreements, could not establish new ones. | No authority: no role in trade negotiations. |
| Reporting Requirements | High accountability: required detailed reporting to Jesuit and Portuguese authorities. | High accountability: constant monitoring via satellite and communication systems. |
| Scheduling Autonomy | Limited flexibility: annual voyages with expected arrival windows. | No flexibility: precise scheduling coordinated with ports, refineries, and corporate logistics. |

Last night, I asked ChatGPT to speculate on what Captain Ferreira’s orders might have been. It responded:

“Deliver the cargo to Nagasaki. Ensure the Portuguese monopoly remains unchallenged. Neutralize any Dutch or English threats. Protect the Jesuit mission. Avoid war with the daimyōs unless absolutely necessary.”

Note how these orders are short and strategic, similar in style to Captain Jack Aubrey’s orders in Master and Commander. Because Ferreira could not communicate with the Viceroy in Goa or with Lisbon once underway, he would have operated under standing orders issued before departing Macau or Goa.

Normally, I might be quick to dismiss this as fanciful, but apparently, US naval commanders going into war zones often have similarly short orders, along the lines of: don’t start a war, don’t lose your ship, and don’t get your sailors killed.

So keep this in mind when delegating missions to AI. Consider how well you understand each of these dimensions:

- Task Novelty: How well-defined is the task? Has it been done before?

- Past Experience: Has the person (or AI) successfully done this task before?

- Skill Level: How competent is the person (or AI) at handling this type of work?

- Task Size & Impact: How critical is the task? What happens if it’s done incorrectly?

- Frequency of Reporting: How often do you need feedback or updates to ensure success?

Thinking Through AI Delegation vs. Pairing

Below is some AI-generated guidance on when full delegation is okay, when guided delegation or active pairing is a better fit, and when to consult the LLM as an expert.

Full Delegation (Rare)

- When appropriate: Simple, low-risk tasks with clear success criteria.

- Example: “Format this JSON data.”

- Risk level: Minimal

- Monitoring needed: Light review of results

Guided Delegation (Common)

- When appropriate: Medium-complexity tasks that have clearly defined boundaries.

- Example: “Add logging to these functions but don’t modify their behavior.”

- Risk level: Moderate

- Monitoring needed: Regular checkpoints, review key decision points

Active Pairing (Most Common)

- When appropriate: Complex, ambiguous, or high-risk tasks.

- Example: “Let’s debug why cards sometimes move to the wrong list.”

- Risk level: High

- Monitoring needed: Continuous oversight, frequent course correction

Expert Consultation (Always Available)

- When appropriate: When you need ideas or approaches rather than implementation.

- Example: “What are some approaches to solve this event synchronization problem?”

- Risk level: Varies

- Monitoring needed: Your judgment becomes the filter
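To make these trade-offs concrete, here is a minimal Python sketch that turns the first four dimensions (novelty, past experience, skill, impact) into one of the four modes, with the fifth dimension, frequency of reporting, following from each mode’s monitoring level. The three-point scale, the `TaskProfile` and `choose_mode` names, and the thresholds are all illustrative assumptions, not a rule from the book or from Grove; treat it as one possible encoding of the heuristic, not a definitive policy.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    """Illustrative three-point scale for each delegation dimension."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class TaskProfile:
    novelty: Level           # How new or ill-defined is the task?
    past_success: Level      # How often has the AI succeeded at similar tasks?
    skill: Level             # How competent are you (and the AI) at this work?
    impact: Level            # How bad is it if the task is done incorrectly?
    wants_code: bool = True  # False when you want ideas, not an implementation


# Monitoring needed for each mode, copied from the guidance in this section.
MONITORING = {
    "Full Delegation": "Light review of results",
    "Guided Delegation": "Regular checkpoints, review key decision points",
    "Active Pairing": "Continuous oversight, frequent course correction",
    "Expert Consultation": "Your judgment becomes the filter",
}


def choose_mode(task: TaskProfile) -> str:
    """Pick a delegation mode. The thresholds here are hypothetical."""
    if not task.wants_code:
        return "Expert Consultation"
    # High stakes or high novelty always calls for pairing.
    if task.impact is Level.HIGH or task.novelty is Level.HIGH:
        return "Active Pairing"
    # A weak track record or low skill also calls for pairing.
    if task.past_success is Level.LOW or task.skill is Level.LOW:
        return "Active Pairing"
    # Only routine, low-stakes tasks with a proven track record are handed off.
    if (
        task.novelty is Level.LOW
        and task.impact is Level.LOW
        and task.past_success is Level.HIGH
    ):
        return "Full Delegation"
    return "Guided Delegation"


if __name__ == "__main__":
    # Rough profiles for the three example tasks in this section (assumed ratings).
    examples = [
        ("format JSON", TaskProfile(Level.LOW, Level.HIGH, Level.HIGH, Level.LOW)),
        ("add logging", TaskProfile(Level.LOW, Level.MEDIUM, Level.HIGH, Level.MEDIUM)),
        ("debug cards", TaskProfile(Level.HIGH, Level.MEDIUM, Level.MEDIUM, Level.HIGH)),
    ]
    for name, task in examples:
        mode = choose_mode(task)
        print(f"{name}: {mode} ({MONITORING[mode]})")
```

The main design choice is that high impact or high novelty overrides everything else: a strong track record alone doesn’t earn full delegation on a risky, unfamiliar task.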

Signs You’ve Delegated Too Much (har har)

From my experience with Claude Code, here are warning signs that you’ve given it too much autonomy:

- Scope creep: It’s solving problems you never asked it to solve.

- Unfamiliar patterns: The code it’s writing doesn’t match the style or approach of your codebase.

- Deep nesting: You’re seeing increasingly complex structures (like deeply nested try/catch blocks).

- Performance degradation: Simple operations become slow or resource-intensive.

- The “horror room” feeling: You come back to find your codebase transformed in unexpected ways.
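Two of these warning signs, scope creep and deep nesting, can be checked mechanically after an agent session. Below is a minimal Python sketch, assuming a Python codebase and a list of changed file paths (for instance, the output of `git diff --name-only`). The function names and glob patterns are hypothetical choices for illustration, not features of Claude Code, and what counts as “too deep” is up to you.

```python
import ast
from fnmatch import fnmatch


def out_of_scope(changed_files: list[str], allowed_patterns: list[str]) -> list[str]:
    """Return changed paths that match none of the globs you asked the agent to touch.

    Note: fnmatch's "*" also matches "/", so "tests/*" covers nested paths too.
    """
    return [
        path
        for path in changed_files
        if not any(fnmatch(path, pattern) for pattern in allowed_patterns)
    ]


def max_try_depth(source: str) -> int:
    """Return how deeply try statements are nested in a piece of Python source.

    Only ast.Try is counted; Python 3.11+ "except*" blocks (ast.TryStar) are not.
    """

    def depth(node: ast.AST, current: int) -> int:
        if isinstance(node, ast.Try):
            current += 1
        deepest = current
        for child in ast.iter_child_nodes(node):
            deepest = max(deepest, depth(child, current))
        return deepest

    return depth(ast.parse(source), 0)


if __name__ == "__main__":
    # Hypothetical session: we asked for changes to src/cards.py and its tests only.
    changed = ["src/cards.py", "tests/test_cards.py", "src/lists.py", "README.md"]
    print(out_of_scope(changed, ["src/cards.py", "tests/*"]))
    # -> ['src/lists.py', 'README.md']

    nested = (
        "try:\n"
        "    try:\n"
        "        try:\n"
        "            x = 1\n"
        "        except ValueError:\n"
        "            pass\n"
        "    except KeyError:\n"
        "        pass\n"
        "except Exception:\n"
        "    pass\n"
    )
    print(max_try_depth(nested))  # -> 3
```

Neither check replaces reading the diff; they simply flag sessions that deserve a closer look before you accept the changes.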